One of the continuing problems philanthropists have is the endless insistence on “metrics.” There are several reasons why foundations love number-based evaluations. They seem objective, even “scientific.” They can show a board that program officers aren’t throwing money away, but supporting programs with demonstrable results.

Of course the good that philanthropy does can’t be reduced to numbers, and far too often nonprofits have to distort their missions in order to win increasingly competitive grants. A rescue mission dedicated to teaching homeless men and women how to live virtuous, Christian lives can get money from many foundations only by tallying the meals and shelter it provides to the destitute. For these foundations, the number of meals a rescue mission serves is more important than the number of lives it saves.

The Hudson Institute’s William Schambra, in a 2012 Nonprofit Quarterly article criticizing foundations’ excessive use of “metrics,” observes that “to win funding from a knowledge-generating foundation, the nonprofit must shoehorn its real-world work into the abstract, unfamiliar jargon to which data accumulators resort when they wish to generalize across (that is, to make disappear) the varieties of particular experiences.”

In the January Wired, Felix Salmon, who blogs about finance for Reuters, has an interesting article in which he points out the problems institutions face when they rely too much on number-derived analysis (or on what Salmon calls “quants”).

According to Salmon, most organizations go through four stages in their use of number-based analysis.

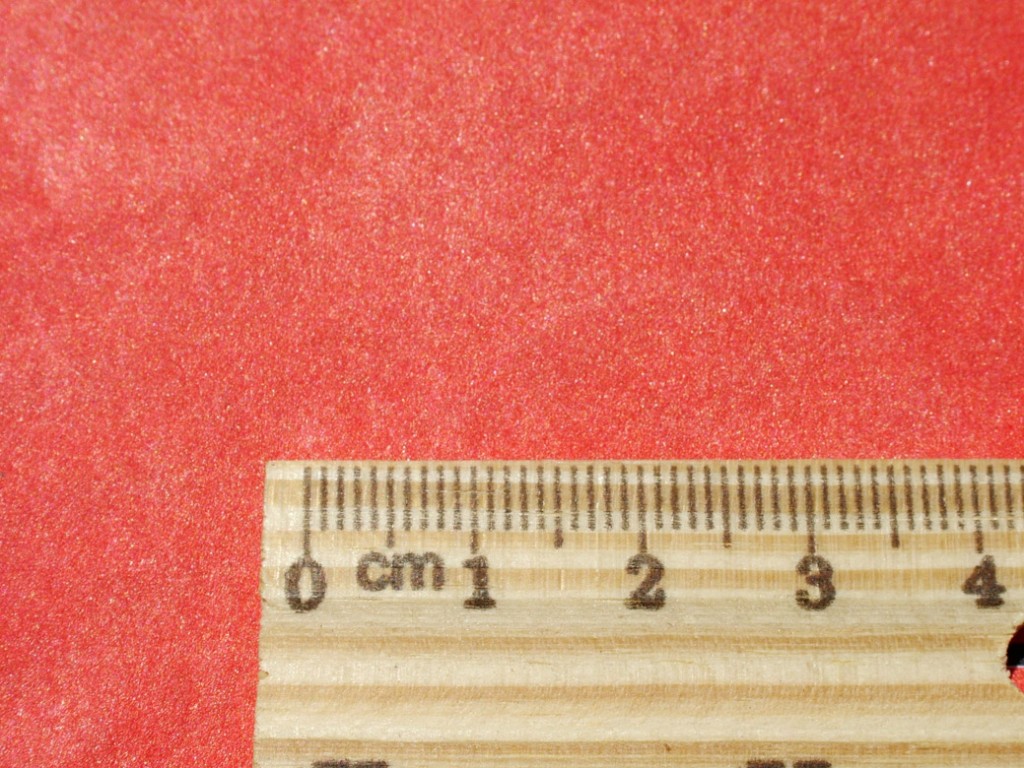

(1) Pre-disruption. Think of dating agencies before the Internet, or the era when department stores displayed their products according to what a manager liked, or even what a manager thought was aesthetically pleasing.

(2) Disruption. Remember when, in the 1980s, you started getting credit card offers based on what a computer thought you could pay? That’s when algorithms took over the credit card industry. In the film Moneyball, the scene in which the Oakland Athletics’ grizzled old scouts are given the sack dramatizes the moment when number-happy sabermetricians took over the clubhouse.

(3) Overshoot. Organizations rely so heavily on numbers to answer every question that the numbers displace human judgment.

(4) Synthesis. Number-derived analysis remains an important tool but can always be overruled by human judgment.

Salmon describes an important law formulated by sociologist Donald T. Campbell in the 1970s. Campbell’s Law states that “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

For example, schools that “teach to the test” illustrate the harm Campbell’s Law describes. So do cities that grade snowplow operators on the amount of snow they remove, a rule that perversely encourages operators to leave harmful black ice on the streets.

Salmon discusses the work of criminologist Peter C. Moskos, who teaches at the John Jay College of Criminal Justice. As part of his field research, Moskos served as a Baltimore police officer for nine months, an experience he recounts in his 2008 book Cop in the Hood.

When Moskos served as a Baltimore cop, the city was relying on a “CompStat” crime-fighting model, which judged police officers solely on the number of arrests they made. Cops know there are many successful ways to fight crime, including spending lots of time walking their beats and building personal connections with responsible members of their communities. Statistical models measure none of this. Because the system rewarded only arrests, Moskos reports, cops often arrested drug users who had already thrown away their drugs, even though the likelihood of a conviction was extremely small.

Humans who use and adapt the information that computers and calculations provide, Salmon says, are more accurate than unaided computers. Expert weather forecasters are about 25 percent more accurate than computer models alone. World chess champion Magnus Carlsen practices against computers all the time, which enables him to beat them more often. And now that every baseball team has statisticians, the best teams (like the Boston Red Sox) are the ones that can take statistical data and then use their best judgment about which players to acquire.

Far too many foundations are stuck in the third of Salmon’s four stages. They spend too much time on complex evaluations that, at best, give only an incomplete picture of whether a program is effective. They wrongly think that numbers are all that matter, and they use number-laden evaluations to justify bad grantmaking.

Foundations would do better if program officers exercised their best judgment, based in part (but never entirely) on what evaluations say.

“As long as the humans are in control, and understand what it is they’re controlling, we’re fine,” Salmon concludes. “It’s when they become slaves to the numbers that trouble breaks out.”

Amen and Amen!

Even now, some states and “watchdogs” would be happy with a charity that spent 90 percent of its revenue on programs, even if those programs were worthless.